An introduction to mrmr

intro-to-mrmr.RmdThe mrmr package is designed to simplify and expedite mark recapture data processing, parameter estimation, and visualization for a narrow class of mark recapture data (namely, those collected by the Sierra Nevada Amphibian Research Group).

Workflow overview

The typical workflow involves three steps:

- Cleaning mark recapture data with

mrmr::clean_data() - Fitting a mark recapture model with

mrmr::fit_model() - Visualizing results with

mrmr::plot_model()

Data specifications

The package comes with example data that illustrate the expected format. Three files are needed.

The first file specifies capture data:

library(dplyr)

#>

#> Attaching package: 'dplyr'

#> The following objects are masked from 'package:stats':

#>

#> filter, lag

#> The following objects are masked from 'package:base':

#>

#> intersect, setdiff, setequal, union

library(readr)

library(tibble)

library(rstan)

#> Loading required package: StanHeaders

#> Loading required package: ggplot2

#> rstan (Version 2.21.2, GitRev: 2e1f913d3ca3)

#> For execution on a local, multicore CPU with excess RAM we recommend calling

#> options(mc.cores = parallel::detectCores()).

#> To avoid recompilation of unchanged Stan programs, we recommend calling

#> rstan_options(auto_write = TRUE)

library(mrmr)

captures <- system.file("extdata",

"capture-example.csv",

package = "mrmr") %>%

read_csv

#> Rows: 466 Columns: 19

#> ── Column specification ────────────────────────────────────────────────────────

#> Delimiter: ","

#> chr (9): swab_id, surveyor1, pit_tag_status, frog_sex, frog_state, swabber_...

#> dbl (7): pit_tag_id, site_id, frog_weight, frog_svl, collection_location, r...

#> date (3): survey_date, collection_date, release_date

#>

#> ℹ Use `spec()` to retrieve the full column specification for this data.

#> ℹ Specify the column types or set `show_col_types = FALSE` to quiet this message.

glimpse(captures)

#> Rows: 466

#> Columns: 19

#> $ swab_id <chr> NA, NA, NA, NA, NA, NA, NA, NA, NA, NA, NA, NA, NA…

#> $ pit_tag_id <dbl> 9.00043e+14, 9.00043e+14, 9.00043e+14, 9.00043e+14…

#> $ site_id <dbl> 70449, 70449, 70449, 70449, 70449, 70449, 70449, 7…

#> $ survey_date <date> 2014-08-07, 2015-08-16, 2014-07-11, 2015-08-15, 2…

#> $ surveyor1 <chr> "Killion", "Killion", "Demaranville", "Lindauer", …

#> $ pit_tag_status <chr> NA, NA, NA, NA, NA, NA, NA, NA, NA, NA, NA, NA, NA…

#> $ frog_sex <chr> "M", "F", "M", "M", "F", "F", "M", "M", "F", "F", …

#> $ frog_state <chr> "Healthy", "Healthy", "Healthy", "Healthy", "Healt…

#> $ frog_weight <dbl> NA, NA, NA, NA, NA, NA, NA, NA, NA, NA, NA, NA, NA…

#> $ frog_svl <dbl> NA, NA, NA, NA, NA, NA, NA, NA, NA, NA, NA, NA, NA…

#> $ swabber_name <chr> NA, NA, NA, NA, NA, NA, NA, NA, NA, NA, NA, NA, NA…

#> $ treatment <chr> "unexposed", "exposed", "unexposed", "unexposed", …

#> $ collection_date <date> 2014-06-27, 2014-06-23, 2014-06-27, 2014-06-27, 2…

#> $ collection_location <dbl> 72996, 72996, 72996, 72996, 72996, 72996, 72996, 7…

#> $ release_location <dbl> 70449, 70449, 70449, 70449, 70449, 70449, 70449, 7…

#> $ release_date <date> 2014-06-28, 2014-06-28, 2014-06-28, 2014-06-28, 2…

#> $ standard_type <chr> NA, NA, NA, NA, NA, NA, NA, NA, NA, NA, NA, NA, NA…

#> $ load <dbl> NA, NA, NA, NA, NA, NA, NA, NA, NA, NA, NA, NA, NA…

#> $ category <chr> "translocated", "translocated", "translocated", "t…Note that the system.file function generates a path to the capture-example.csv file, but in a real application, you would likely use a path to a local file (real data, not the example data included in the package). The files must be formatted with the same columns and conventions uesd in the example data. Each row in this file represents a capture or recapture event.

The second file specifies translocation data:

translocations <- system.file("extdata",

"translocation-example.csv",

package = "mrmr") %>%

read_csv

#> Rows: 80 Columns: 17

#> ── Column specification ────────────────────────────────────────────────────────

#> Delimiter: ","

#> chr (9): swab_id, treatment, pit_tag_status, frog_sex, frog_state, frog_loc...

#> dbl (6): pit_tag_id, frog_weight, frog_svl, quant_cycle, start_quant, load

#> date (2): release_date, swabbing_date

#>

#> ℹ Use `spec()` to retrieve the full column specification for this data.

#> ℹ Specify the column types or set `show_col_types = FALSE` to quiet this message.

glimpse(translocations)

#> Rows: 80

#> Columns: 17

#> $ swab_id <chr> "RKS16752", "RKS16755", "RKS16756", "RKS16757", "RKS167…

#> $ pit_tag_id <dbl> 9.00043e+14, 9.00043e+14, 9.00043e+14, 9.00043e+14, 9.0…

#> $ treatment <chr> "exposed", "exposed", "exposed", "exposed", "exposed", …

#> $ release_date <date> 2014-06-28, 2014-06-28, 2014-06-28, 2014-06-28, 2014-0…

#> $ swabbing_date <date> 2014-06-23, 2014-06-23, 2014-06-23, 2014-06-23, 2014-0…

#> $ pit_tag_status <chr> "Y", "Y", "Y", "Y", "Y", "Y", "Y", "Y", "Y", "Y", "Y", …

#> $ frog_sex <chr> "F", "F", "M", "M", "M", "F", "F", "F", "M", "F", "F", …

#> $ frog_state <chr> "Healthy", "Healthy", "Healthy", "Healthy", "Healthy", …

#> $ frog_location <chr> "in lake", "in lake", "in lake", "in lake", "in lake", …

#> $ frog_weight <dbl> 11, 13, 12, 10, 10, 10, 11, 13, 13, 11, 10, 11, 11, 10,…

#> $ frog_svl <dbl> 46, 51, 44, 42, 44, 40, 45, 48, 49, 47, 48, 47, 46, 44,…

#> $ swabber_name <chr> "Kauffman", "Kauffman", "Kauffman", "Kauffman", "Kauffm…

#> $ frog_comments <chr> "Treated, for translocation to 70449.", "Treated, for t…

#> $ quant_cycle <dbl> 35.72, 26.40, 28.51, 34.27, 36.12, 28.55, 31.44, 27.37,…

#> $ start_quant <dbl> 0.09, 51.65, 12.33, 0.25, 0.07, 12.03, 1.68, 26.74, 0.4…

#> $ standard_type <chr> "genomic", "genomic", "genomic", "genomic", "genomic", …

#> $ load <dbl> 432, 247920, 59184, 1200, 336, 57744, 8064, 128352, 196…Each row corresponds to a translocation event of one unique individual.

The third file specifies survey-level data:

surveys <- system.file("extdata",

"survey-example.csv",

package = "mrmr") %>%

read_csv

#> Rows: 51 Columns: 4

#> ── Column specification ────────────────────────────────────────────────────────

#> Delimiter: ","

#> dbl (3): primary_period, secondary_period, survey_duration

#> date (1): survey_date

#>

#> ℹ Use `spec()` to retrieve the full column specification for this data.

#> ℹ Specify the column types or set `show_col_types = FALSE` to quiet this message.

glimpse(surveys)

#> Rows: 51

#> Columns: 4

#> $ survey_date <date> 2014-06-28, 2014-07-10, 2014-07-11, 2014-07-12, 2014…

#> $ primary_period <dbl> 1, 2, 2, 2, 3, 3, 3, 4, 4, 4, 5, 5, 5, 6, 6, 6, 7, 7,…

#> $ secondary_period <dbl> 0, 1, 2, 3, 1, 2, 3, 1, 2, 3, 1, 2, 3, 1, 2, 3, 1, 2,…

#> $ survey_duration <dbl> 0.00, 7.61, 7.00, 5.35, 7.33, 7.58, 8.16, 7.58, 7.85,…Here, each row is a unique survey.

Data processing

To load and process the data, provide paths to the data files as arguments to the mrmr::clean_data() function:

data <- clean_data(captures, surveys, translocations)The output provides data frames as list elements, and a list element called stan_d, which contains data that has been pre-processed for model fitting.

Including detection/non-detection covariates

Survey-level covariates that might affect the probability of detection can be included via the capture_formula argument to clean_data. Note: it is a good idea to ensure that any continuous covariates are rescaled prior to using them as covariates to have mean = 0 and unit standard deviation. This can be acheived by adding a column to the captures data Using a covariate value like year, which typically has a mean in the thousands and a standard deviation far from one will almost assurredly result in numerical issues when fitting a model.

Assuming we wanted to include a covariate for detection like person_hours, we can do so as follows:

# center and scale the covariate

captures <- captures %>%

mutate(c_person_hours = c(scale(person_hours)))

# specify the formula

data <- clean_data(captures, surveys, translocations,

capture_formula = ~ c_person_hours)Including survival covariates

Individual-level survival covariates can be included optionally via the survival_formula and survival_fill_value arguments. Both of these must be specified, because covariate values for pseudo-individuals must be filled in (they are never observed). So, for example, to evaluate the effect of an experimental treatment, if some individuals belong to a “treatment” group and others belong to an “control” group, then a group must be specified as a fill value (e.g., “wild-caught” or “control”, depending on the experiment):

# specify the formula

data <- clean_data(captures, surveys, translocations,

survival_formula = ~ treatment,

survival_fill_value = c(treatment = "wild-caught"))Model structure

The mrmr package implements a Bayesian open-population Jolly-Seber mark recapture model with known additions to the population (introduced adults). The model tracks the states of \(M\) individuals that comprise a superpopulation made up of real and pseudo-individuals (see Joseph and Knapp 2018, Ecosphere for details).

We assume a robust sampling design where the states of individuals are constant within primary periods, which have repeat secondary periods within them (during which observations are made). The possible states of individuals include “not recruited”, “alive”, and “dead”. The possible observations of individuals include “detected” and “not detected”. We assume that individuals that are in the “not recruited” or “dead” states are never detected (i.e., there are no mistakes in the individual PIT tag ID records).

Model fitting

To fit a mark-recapture model, use mrmr::fit_model(). This model accounts for known introductions into the population, and has random effects to account for variation in survival and recruitment through time. The example below uses two chains and relatively few iterations for speed, but it is a good idea to run 3+ chains, and at least 1000 iterations.

mod <- fit_model(data, cores = parallel::detectCores(),

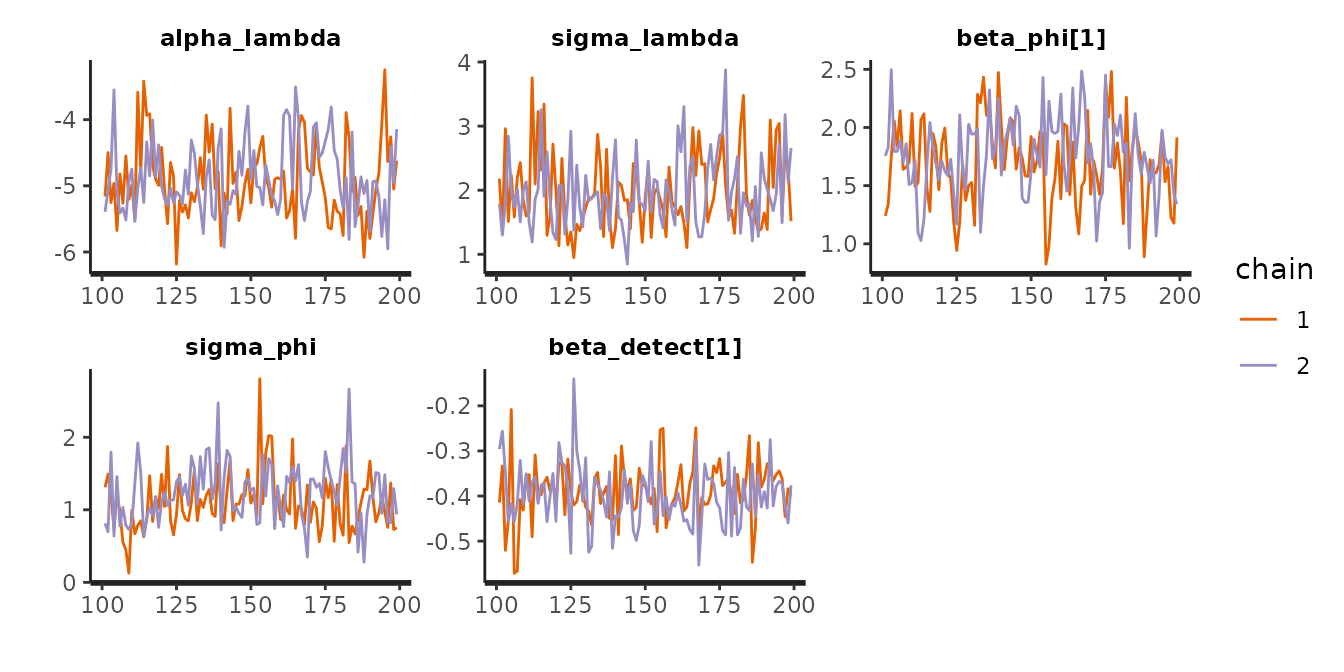

chains = 2, iter = 200)To diagnose potential non-convergence of the MCMC algorithm, inspect traceplots:

pars_to_plot <- c('alpha_lambda',

'sigma_lambda',

'beta_phi',

'sigma_phi',

'beta_detect')

traceplot(mod$m_fit, pars = pars_to_plot)

It is also a good idea to look at Rhat estimates to see whether they indicate of a lack of convergence (Rhat values \(\geq\) 1.01):

print(mod$m_fit, pars = pars_to_plot)

#> Inference for Stan model: twostate.

#> 2 chains, each with iter=200; warmup=100; thin=1;

#> post-warmup draws per chain=100, total post-warmup draws=200.

#>

#> mean se_mean sd 2.5% 25% 50% 75% 97.5% n_eff Rhat

#> alpha_lambda -4.89 0.06 0.56 -5.81 -5.26 -4.96 -4.56 -3.79 100 0.99

#> sigma_lambda 1.98 0.05 0.58 1.14 1.55 1.87 2.35 3.26 135 1.01

#> beta_phi[1] 1.72 0.03 0.35 0.98 1.52 1.72 1.95 2.43 106 1.01

#> sigma_phi 1.17 0.05 0.42 0.55 0.86 1.13 1.42 2.02 83 1.01

#> beta_detect[1] -0.39 0.00 0.07 -0.52 -0.43 -0.40 -0.35 -0.26 222 1.00

#>

#> Samples were drawn using NUTS(diag_e) at Tue Aug 17 21:21:23 2021.

#> For each parameter, n_eff is a crude measure of effective sample size,

#> and Rhat is the potential scale reduction factor on split chains (at

#> convergence, Rhat=1).Built-in visualizations

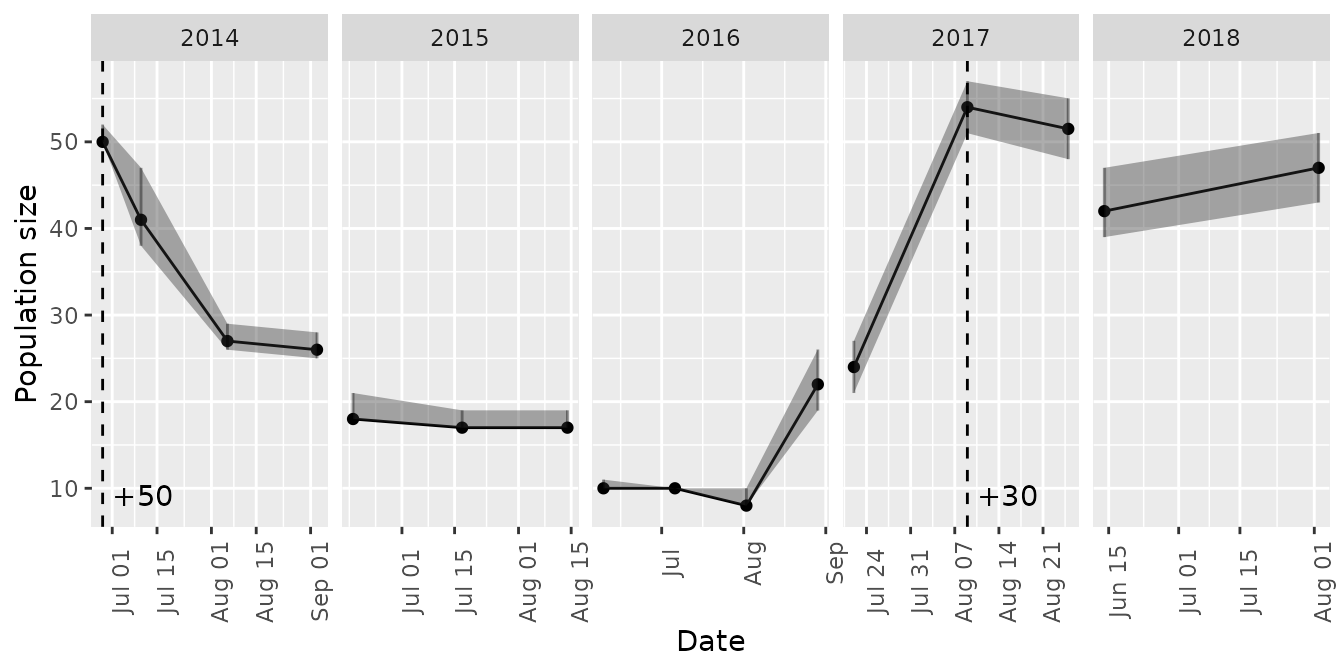

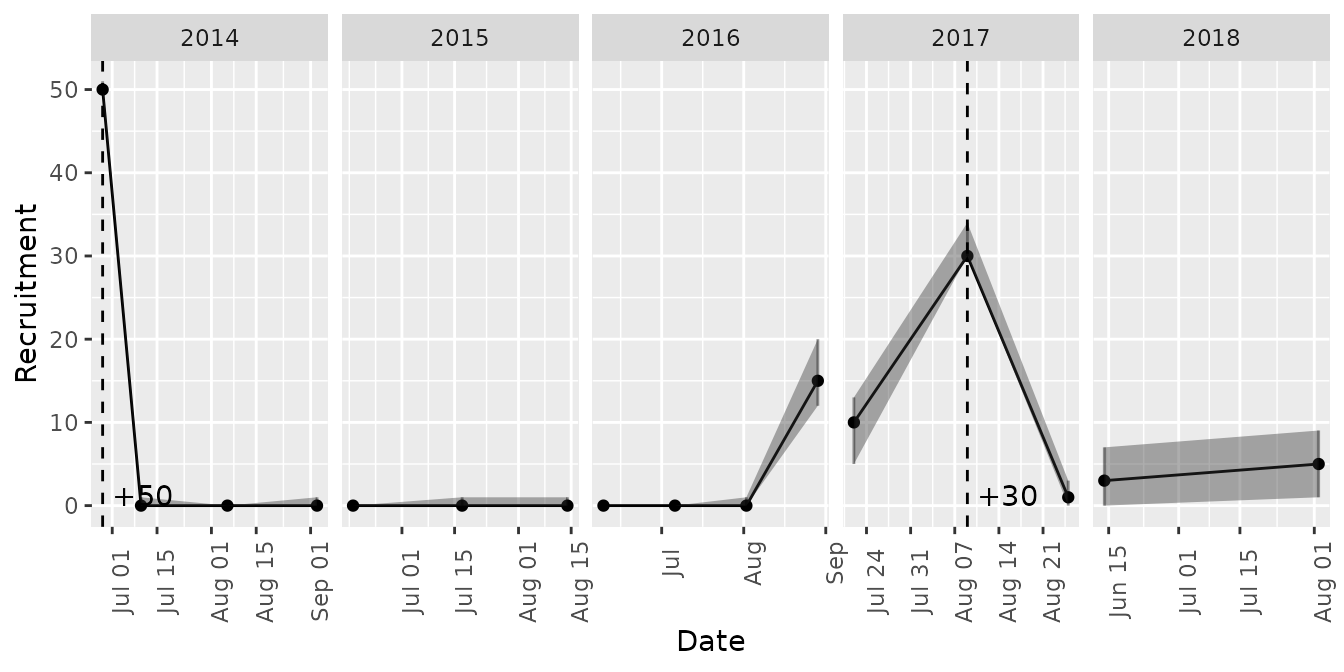

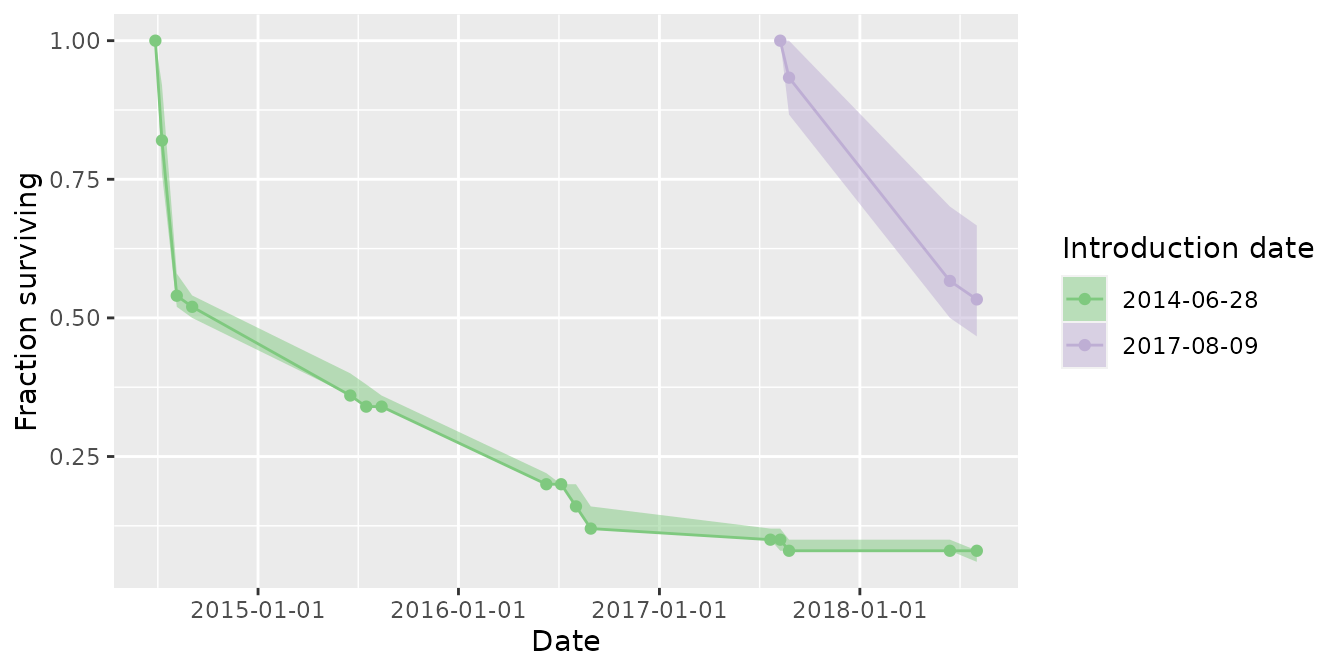

Time series of abundance, recruitment, and survival of introduced cohorts are available through the mrmr::plot_model() function.

plot_model(mod, what = 'abundance')

#> Joining, by = "primary_period"

plot_model(mod, what = 'recruitment')

#> Joining, by = "primary_period"

plot_model(mod, what = 'survival')

#> Joining, by = "pit_tag_id"

#> Joining, by = "primary_period"

Translocation survival tables

If individuals have been translocated into sites, you can generate a table of estimated survival through time for each cohort (release date) as a function of years since introduction:

survival_table(mod)

#> Joining, by = "pit_tag_id"

#> Joining, by = "primary_period"

#> # A tibble: 7 × 5

#> years_since_introduction release_date lo_survival median_survival hi_survival

#> <dbl> <date> <dbl> <dbl> <dbl>

#> 1 0 2014-06-28 0.6 0.627 0.673

#> 2 0 2017-08-09 0.867 0.933 1

#> 3 1 2014-06-28 0.347 0.353 0.374

#> 4 1 2017-08-09 0.500 0.55 0.667

#> 5 2 2014-06-28 0.17 0.175 0.19

#> 6 3 2014-06-28 0.0867 0.0933 0.113

#> 7 4 2014-06-28 0.07 0.08 0.0903You can also get estimates of survival through time by individual or cohort:

survival_table(mod, by_cohort = TRUE)

#> Joining, by = "pit_tag_id"

#> Joining, by = "primary_period"

#> # A tibble: 7 × 5

#> years_since_introduction release_date lo_survival median_survival hi_survival

#> <dbl> <date> <dbl> <dbl> <dbl>

#> 1 0 2014-06-28 0.6 0.627 0.673

#> 2 0 2017-08-09 0.867 0.933 1

#> 3 1 2014-06-28 0.347 0.353 0.374

#> 4 1 2017-08-09 0.500 0.55 0.667

#> 5 2 2014-06-28 0.17 0.175 0.19

#> 6 3 2014-06-28 0.0867 0.0933 0.113

#> 7 4 2014-06-28 0.07 0.08 0.0903

survival_table(mod, by_individual = TRUE)

#> Joining, by = "pit_tag_id"

#> Joining, by = "primary_period"

#> # A tibble: 310 × 6

#> years_since_introduction release_date pit_tag_id lo_survival median_survival

#> <dbl> <date> <chr> <dbl> <dbl>

#> 1 0 2014-06-28 900043000104502 1 1

#> 2 0 2014-06-28 900043000104505 1 1

#> 3 0 2014-06-28 900043000104510 1 1

#> 4 0 2014-06-28 900043000104512 0.333 0.333

#> 5 0 2014-06-28 900043000104513 0 0

#> 6 0 2014-06-28 900043000104516 0.333 0.333

#> 7 0 2014-06-28 900043000104520 0 0

#> 8 0 2014-06-28 900043000104521 0.333 0.333

#> 9 0 2014-06-28 900043000104522 1 1

#> 10 0 2014-06-28 900043000104524 1 1

#> # … with 300 more rows, and 1 more variable: hi_survival <dbl>Custom visualizations

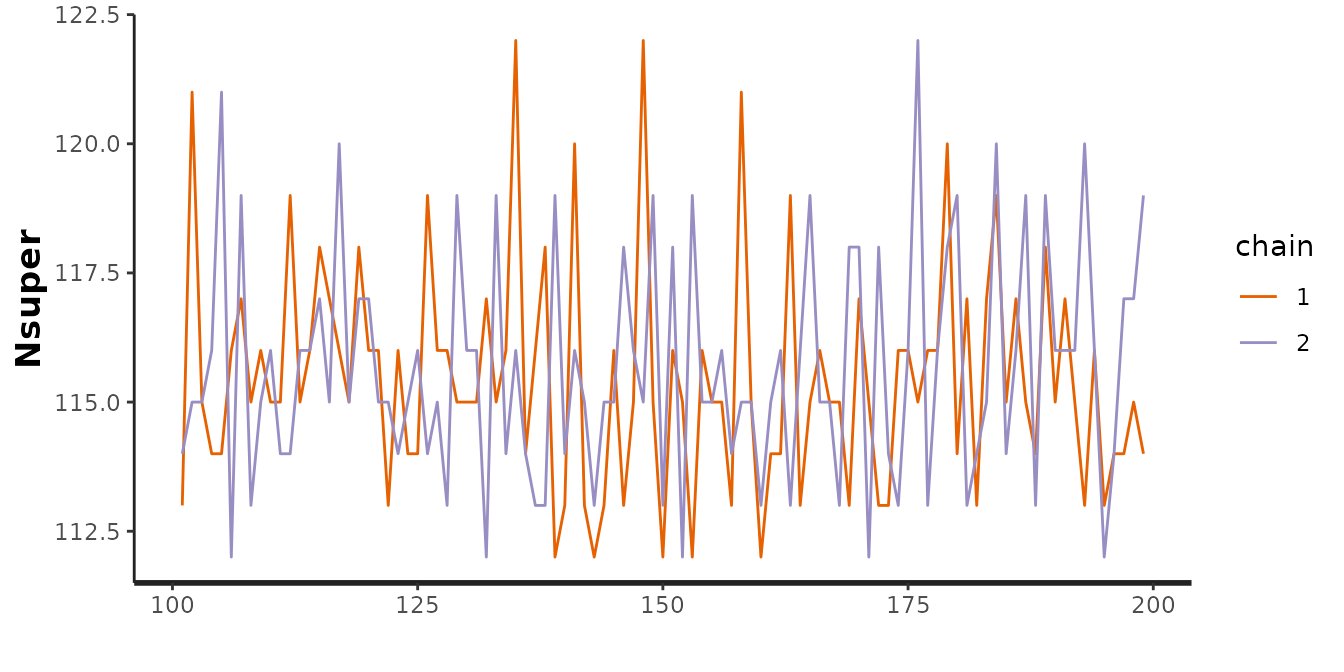

Any of the plotting functionality that you would expect from a stanfit model is available as well, by accessing the m_fit list element from a model object. For example, we could assess the posterior for the superpopulation size:

traceplot(mod$m_fit, pars = "Nsuper")

Cases with no translocations

In some cases, there may not be any introductions of animals into a population. The only difference in implementation in such cases is that the translocations argument to clean_data() can be omitted:

data_no_trans <- clean_data(captures, surveys)

mod_no_trans <- fit_model(data_no_trans)Cases with known removals

When individuals are removed from a population, e.g., for translocation, these known removals can be included as data to increase the precision of abundance estimates. An example dataset fitting this case is included at an imaginary site called “Equid”. Note below that we filter out dead animals – any dead animals encountered on surveys should be in the removals data, and not in the captures data frame, which should only contain live animals (those that might survive to the next primary period).

captures <- system.file("extdata",

"equid-captures.csv",

package = "mrmr") %>%

read_csv %>%

filter(capture_animal_state != 'dead')

surveys <- system.file("extdata",

"equid-surveys.csv",

package = "mrmr") %>%

read_csv

removals <- system.file("extdata",

"equid-removals.csv",

package = "mrmr") %>%

read_csv

d <- clean_data(captures, surveys, removals = removals)

m <- fit_model(d, cores = 2, chains = 2)